Twitter Data Collection & Analysis#

In this lesson, we’re going to learn how to analyze and explore Twitter data with the Python/command line tool twarc. We’re specifically going to work with twarc2, which is designed for version 2 of the Twitter API (released in 2020) and the Academic Research track of the Twitter API (released in 2021), which enables researchers to collect tweets from the entire Twitter archive for free.

Twarc was developed by a project called Documenting the Now. The DocNow team develops tools and ethical frameworks for social media research.

Dataset#

[David Foster Wallace]…has become lit-bro shorthand…Make a passing reference to the “David Foster Wallace fanboy” and you can assume the reader knows whom you’re talking about.

—Molly Fischer, “David Foster Wallace, Beloved Author of Bros”

Source: Giovanni Giovanetti, NYT

Source: Giovanni Giovanetti, NYT

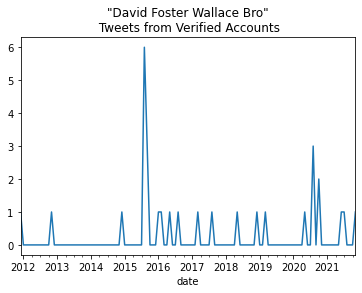

The Twitter conversation that we’re going to explore in this lesson is related to “Wallace bros” — fans of the author David Foster Wallace who are often described as “bros” or, more pointedly, “David Foster Wallace bros.”

For example, in Slate in 2015, Molly Fischer argued that David Foster Wallace’s writing — most famously his novel Infinite Jest — tended to attract a fan base of chauvinistic and misogynistic young men. But other people have defended Wallace’s fans and the author against such charges. What is a “David Foster Wallace bro”? Was DFW himself a “bro”? Who is using this phrase, how often are they using it, and why? We’re going to track this phrase and explore the varied viewpoints in this cultural conversation by analyzing tweets that mention “David Foster Wallace bro.”

Search Queries & Privacy Concerns#

To collect tweets from the Twitter API, we need to make queries, or requests for specific kinds of tweets — e.g., twarc2 search *query*. The simplest kind of query is a keyword search, such as the phrase “David Foster Wallace bro,” which should return any tweet that contains all of these words in any order — twarc2 search "David Foster Wallace bro".

There are many other operators that we can add to a query, which would allow us to collect tweets only from specific Twitter users or locations, or to only collect tweets that meet certain conditions, such as containing an image or being authored by a verified Twitter user. Here’s an excerpted table of search operators taken from Twitter’s documentation about how to build a search query. There are many other operators beyond those included in this table, and I recommend reading through Twitter’s entire web page on this subject.

Search Operator |

Explanation |

|---|---|

keyword |

Matches a keyword within the body of a Tweet. |

“exact phrase match” |

Matches the exact phrase within the body of a Tweet. |

- |

Do NOT match a keyword or operator |

# |

Matches any Tweet containing a recognized hashtag |

from:, to: |

Matches any Tweet from or to a specific user. |

place: |

Matches Tweets tagged with the specified location or Twitter place ID. |

is:reply, is:quote |

Returns only replies or quote tweets. |

is:verified |

Returns only Tweets whose authors are verified by Twitter. |

has:media |

Matches Tweets that contain a media object, such as a photo, GIF, or video, as determined by Twitter. |

has:images, has:videos |

Matches Tweets that contain a recognized URL to an image. |

has:geo |

Matches Tweets that have Tweet-specific geolocation data provided by the Twitter user. |

In this lesson, we will only be collecting tweets that were tweeted by verified users: "David Foster Wallace bro is:verified".

As I discussed in “Users’ Data: Legal & Ethical Considerations,” collecting publicly available tweets is legal, but it still raises a lot of privacy concerns and ethical quandaries — particularly when you re-publish user’s data, as I am in this lesson. To reduce potential harm to Twitter users when re-publishing or citing tweets, it can be helpful to ask for explicit permission from the authors or to focus on tweets that have already been reasonably exposed to the public (e.g., tweets with many retweets or tweets from verified users), such that re-publishing the content will not unduly increase risk to the user.

Install and Import Libraries#

Because twarc relies on Twitter’s API, we need to apply for a Twitter developer account and create a Twitter application before we can use it. You can find instructions for the application process in “Twitter API Set Up.”

If you haven’t done so already, you need to install twarc and configure twarc with your bearer token and/or API keys.

#!pip install twarc

#!twarc2 configure

To make an interactive plot, we’re also going to install the package plotly.

!pip install plotly

Then we’re going to import plotly as well as pandas

import plotly.express as px

import pandas as pd

pd.options.display.max_colwidth = 400

pd.options.display.max_columns = 90

Get Tweet Counts#

The first thing we’re going to do is retrieve “tweet counts” — that is, retrieve the number of tweets that included the phrase “David Foster Wallace bro” each day in Twitter’s history.

The tweet counts API endpoint is a convenient feature of the v2 API (first introduced in 2021) that allows us to get a sense of how many tweets will be returned for a given query before we actually collect all the tweets that match the query. We won’t get the text of the tweets or the users who tweeted the tweets or any other relevant data. We will simply get the number of tweets that match the query. This is helpful because we might be able to see that the search query “Wallace” matches too many tweets, which would encourage us to narrow our search by modifying the query.

The tweet counts API endpoint is perhaps even more useful for research projects that are primarily interested in tracking the volume of a Twitter conversation over time. In this case, tweet counts enable a researcher to retrieve this information in a way that’s faster and easier than retrieving all tweets and relevant metadata.

To get tweet counts from Twitter’s entire history with twarc2, we will use twarc2 counts followed by a search query.

We will also use the flag --csv because we want to output the data as a CSV, the flag --archive because we’re working with the Academic Research track of the Twitter API and want access to the full archive, and the flag --granularity day to get tweet counts per day (other options include hour and minute — you can see more in twarc’s documentation). Finally, we write the data to a CSV file.

!twarc2 counts "David Foster Wallace bro is:verified" --csv --archive --granularity day > twitter-data/tweet-counts.csv

We can read in this CSV file with pandas, parse the date columns, and sort from earliest to latest. The code below is largely borrowed from Ed Summers. Thanks, Ed!

Pandas Review

Do you need a refresher or introduction to the Python data analysis library Pandas? Be sure to check out Pandas Basics (1-3) in this textbook!

# Code borrowed from Ed Summers

# https://github.com/edsu/notebooks/blob/master/Black%20Lives%20Matter%20Counts.ipynb

# Read in CSV as DataFrame

tweet_counts_df = pd.read_csv('twitter-data/tweet-counts.csv', parse_dates=['start', 'end'])

# Sort values by earliest date

tweet_counts_df = tweet_counts_df.sort_values('start')

tweet_counts_df

| start | end | day_count | |

|---|---|---|---|

| 5735 | 2006-03-21 00:00:00+00:00 | 2006-03-22 00:00:00+00:00 | 0 |

| 5736 | 2006-03-22 00:00:00+00:00 | 2006-03-23 00:00:00+00:00 | 0 |

| 5737 | 2006-03-23 00:00:00+00:00 | 2006-03-24 00:00:00+00:00 | 0 |

| 5738 | 2006-03-24 00:00:00+00:00 | 2006-03-25 00:00:00+00:00 | 0 |

| 5739 | 2006-03-25 00:00:00+00:00 | 2006-03-26 00:00:00+00:00 | 0 |

| ... | ... | ... | ... |

| 26 | 2021-12-19 00:00:00+00:00 | 2021-12-20 00:00:00+00:00 | 0 |

| 27 | 2021-12-20 00:00:00+00:00 | 2021-12-21 00:00:00+00:00 | 0 |

| 28 | 2021-12-21 00:00:00+00:00 | 2021-12-22 00:00:00+00:00 | 0 |

| 29 | 2021-12-22 00:00:00+00:00 | 2021-12-23 00:00:00+00:00 | 0 |

| 30 | 2021-12-23 00:00:00+00:00 | 2021-12-23 14:32:00+00:00 | 0 |

5757 rows × 3 columns

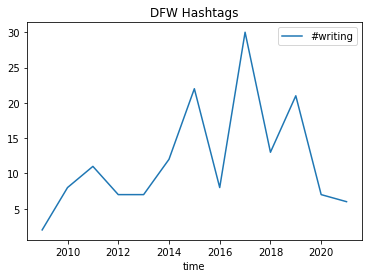

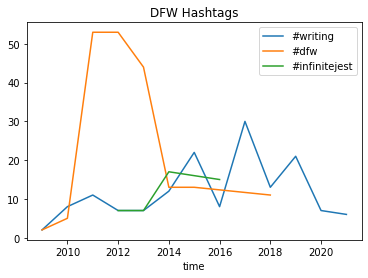

Then we can make a quick plot of tweets per day with plotly

# Code borrowed from Ed Summers

# https://github.com/edsu/notebooks/blob/master/Black%20Lives%20Matter%20Counts.ipynb

# Make a line plot from the DataFrame and specify x and y axes, axes titles, and plot title

figure = px.line(tweet_counts_df, x='start', y='day_count',

labels={'start': 'Time', 'day_count': 'Tweets per Day'},

title= 'DFW Bro Tweets'

)

figure.show()

With a plotly line chart, we can hover over points to see more information, and we can use the tool bar in the upper right corner to zoom or pan on different parts of the graph. We can also press the camera button to download an image of the graph at any pan or zoom level.

To return to the original view, double-click on the plot.

Get Tweets (Standard Track)#

To actually collect tweets and their associated metadata, we can use the command twarc2 search and insert a query.

Here we’re going to search for any tweets that mention the words “David Foster Wallace bro” and were tweeted by verified accounts in the past week. By default, twarc2 search will use the standard track of the Twitter API, which only collects tweets from the past week.

!twarc2 search "David Foster Wallace is:verified"

{"data": [{"entities": {"annotations": [{"start": 40, "end": 59, "probability": 0.961, "type": "Person", "normalized_text": "David Foster Wallace"}]}, "source": "Twitter for iPhone", "conversation_id": "1473826748169175048", "text": "\u201cEvery love story is a ghost story.\u201c\u00a0\n- David Foster Wallace", "created_at": "2021-12-23T01:24:13.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "90573676", "id": "1473826748169175048", "possibly_sensitive": false, "public_metrics": {"retweet_count": 13, "reply_count": 1, "like_count": 55, "quote_count": 2}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "67", "name": "Interests and Hobbies", "description": "Interests, opinions, and behaviors of individuals, groups, or cultures; like Speciality Cooking or Theme Parks"}, "entity": {"id": "1278071502840066048", "name": "Paranormal"}}]}, {"entities": {"annotations": [{"start": 32, "end": 51, "probability": 0.6432, "type": "Person", "normalized_text": "David Foster Wallace"}], "urls": [{"start": 137, "end": 160, "url": "https://t.co/uP4U30fkqv", "expanded_url": "https://www.backstage.com/magazine/article/voiceover-casting-68077/?utm_campaign=organic&utm_content=casting%2Clink%2Cstock-misc%2Ctalent%2Cvoiceover&utm_medium=social&utm_source=twitter", "display_url": "backstage.com/magazine/artic\u2026"}]}, "source": "Sprout Social", "conversation_id": "1473762018855370755", "text": "From a short film adaptation of David Foster Wallace Story to a feature film and everything in between, here\u2019s what\u2019s casting this week. https://t.co/uP4U30fkqv", "created_at": "2021-12-22T21:07:01.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "19920585", "id": "1473762018855370755", "possibly_sensitive": false, "public_metrics": {"retweet_count": 0, "reply_count": 0, "like_count": 0, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"source": "Twitter Web App", "conversation_id": "1473587774821437441", "entities": {"mentions": [{"start": 0, "end": 11, "username": "glennndiaz", "id": "917615998047367168"}]}, "text": "@glennndiaz Hahahaha di ko pa nga binabasa Wikipedia page niya. Before I read The Corrections, akala ko iisa lang sila ni David Foster Wallace hahahaha", "created_at": "2021-12-22T10:06:25.000Z", "lang": "tl", "in_reply_to_user_id": "917615998047367168", "reply_settings": "everyone", "author_id": "37662244", "id": "1473595773400981504", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1473591381436162052"}], "public_metrics": {"retweet_count": 0, "reply_count": 0, "like_count": 0, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"entities": {"annotations": [{"start": 16, "end": 35, "probability": 0.7984, "type": "Person", "normalized_text": "david foster wallace"}, {"start": 54, "end": 65, "probability": 0.9346, "type": "Person", "normalized_text": "david foster"}], "mentions": [{"start": 3, "end": 14, "username": "el_fodongo", "id": "966631615"}]}, "source": "Twitter for iPhone", "conversation_id": "1473360294898548738", "text": "RT @el_fodongo: david foster wallace's dog was called david foster gromit", "created_at": "2021-12-21T18:30:42.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "86101978", "id": "1473360294898548738", "possibly_sensitive": false, "referenced_tweets": [{"type": "retweeted", "id": "1392179447051165696"}], "public_metrics": {"retweet_count": 192, "reply_count": 0, "like_count": 0, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"entities": {"annotations": [{"start": 16, "end": 35, "probability": 0.7984, "type": "Person", "normalized_text": "david foster wallace"}, {"start": 54, "end": 65, "probability": 0.9346, "type": "Person", "normalized_text": "david foster"}], "mentions": [{"start": 3, "end": 14, "username": "el_fodongo", "id": "966631615"}]}, "source": "Twitter Web App", "conversation_id": "1473359224453623808", "text": "RT @el_fodongo: david foster wallace's dog was called david foster gromit", "created_at": "2021-12-21T18:26:27.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "76718852", "id": "1473359224453623808", "possibly_sensitive": false, "referenced_tweets": [{"type": "retweeted", "id": "1392179447051165696"}], "public_metrics": {"retweet_count": 192, "reply_count": 0, "like_count": 0, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"entities": {"annotations": [{"start": 84, "end": 103, "probability": 0.9894, "type": "Person", "normalized_text": "David Foster Wallace"}, {"start": 109, "end": 124, "probability": 0.8558, "type": "Person", "normalized_text": "\u00d3scar Villasante"}], "mentions": [{"start": 3, "end": 19, "username": "Notodofilmfest_", "id": "161988497"}, {"start": 49, "end": 63, "username": "camaraabierta", "id": "52823380"}]}, "source": "Twitter for iPhone", "conversation_id": "1473292247873593345", "text": "RT @Notodofilmfest_: \u2728Premio al Mejor Documental\u2728@camaraabierta\n\"Peque\u00f1o Homenaje a David Foster Wallace\" de \u00d3scar Villasante\nhttps://t.co/\u2026", "created_at": "2021-12-21T14:00:18.000Z", "lang": "es", "reply_settings": "everyone", "author_id": "52823380", "id": "1473292247873593345", "possibly_sensitive": false, "referenced_tweets": [{"type": "retweeted", "id": "1473269378565160974"}], "public_metrics": {"retweet_count": 1, "reply_count": 0, "like_count": 0, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"source": "Twitter for iPhone", "conversation_id": "1472984031864279045", "entities": {"mentions": [{"start": 0, "end": 14, "username": "TomA3aenssens", "id": "1189576208"}, {"start": 15, "end": 30, "username": "WimOosterlinck", "id": "53060760"}]}, "text": "@TomA3aenssens @WimOosterlinck De auteur die de doorslag zou geven, is David Foster Wallace, maar die staat bij de fictie.", "created_at": "2021-12-20T17:54:22.000Z", "lang": "nl", "in_reply_to_user_id": "1189576208", "reply_settings": "everyone", "author_id": "292945752", "id": "1472988761785049090", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1472987840288116740"}], "public_metrics": {"retweet_count": 0, "reply_count": 0, "like_count": 3, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"entities": {"annotations": [{"start": 46, "end": 57, "probability": 0.7361, "type": "Person", "normalized_text": "Bill Shankly"}, {"start": 60, "end": 79, "probability": 0.9075, "type": "Person", "normalized_text": "David Foster Wallace"}, {"start": 82, "end": 97, "probability": 0.9925, "type": "Person", "normalized_text": "Marina Tsvetaeva"}, {"start": 100, "end": 105, "probability": 0.9963, "type": "Person", "normalized_text": "Eminem"}, {"start": 112, "end": 125, "probability": 0.9834, "type": "Person", "normalized_text": "Kenny Dalglish"}], "urls": [{"start": 169, "end": 192, "url": "https://t.co/rirr55pD5O", "expanded_url": "https://www.amazon.co.uk/gp/aw/d/1781724253/", "display_url": "amazon.co.uk/gp/aw/d/178172\u2026"}]}, "source": "Twitter for iPhone", "conversation_id": "1472930896223383559", "text": "Find me another book that assembles a cast of Bill Shankly, David Foster Wallace, Marina Tsvetaeva, Eminem, and Kenny Dalglish. A unique stocking filler, or so I hear.\n\nhttps://t.co/rirr55pD5O", "created_at": "2021-12-20T14:04:26.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "2435437579", "id": "1472930896223383559", "possibly_sensitive": false, "public_metrics": {"retweet_count": 1, "reply_count": 0, "like_count": 1, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1095842197574844416", "name": "Kenny Dalglish"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "92", "name": "Sports Personality", "description": "A Sports Personality like 'Skip Bayless'"}, "entity": {"id": "1095842197574844416", "name": "Kenny Dalglish"}}, {"domain": {"id": "92", "name": "Sports Personality", "description": "A Sports Personality like 'Skip Bayless'"}, "entity": {"id": "1202239681422774272", "name": "Sports icons"}}, {"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "808389766676811780", "name": "Eminem", "description": "Eminem"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "808389766676811780", "name": "Eminem", "description": "Eminem"}}, {"domain": {"id": "55", "name": "Music Genre", "description": "A category for a musical style, like Pop, Rock, or Rap"}, "entity": {"id": "810937888334487552", "name": "Rap", "description": "Hip-Hop/Rap"}}]}, {"source": "Twitter for Android", "conversation_id": "1472600656787742725", "entities": {"mentions": [{"start": 0, "end": 13, "username": "george_salis", "id": "1357934563838812161"}, {"start": 14, "end": 30, "username": "TheLastSisyphus", "id": "340072715"}], "urls": [{"start": 52, "end": 75, "url": "https://t.co/nLbntaS24J", "expanded_url": "https://sites.utexas.edu/ransomcentermagazine/2016/01/29/david-foster-wallace-and-20-years-of-infinite-jest/", "display_url": "sites.utexas.edu/ransomcenterma\u2026"}]}, "text": "@george_salis @TheLastSisyphus Also something here. https://t.co/nLbntaS24J", "created_at": "2021-12-19T17:58:24.000Z", "lang": "en", "in_reply_to_user_id": "1357934563838812161", "reply_settings": "everyone", "author_id": "17853057", "id": "1472627388911538176", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1472604673962037250"}], "public_metrics": {"retweet_count": 0, "reply_count": 2, "like_count": 1, "quote_count": 0}}, {"entities": {"annotations": [{"start": 33, "end": 52, "probability": 0.9895, "type": "Person", "normalized_text": "David Foster Wallace"}], "urls": [{"start": 90, "end": 113, "url": "https://t.co/poOtnWcmsc", "expanded_url": "https://twitter.com/velazquera/status/1471895454103314433", "display_url": "twitter.com/velazquera/sta\u2026"}]}, "source": "Twitter for iPhone", "conversation_id": "1471945897038729217", "text": "Estoy tan feliz: mi ensayo sobre David Foster Wallace est\u00e1 incluido en esta publicaci\u00f3n \ud83e\udd70 https://t.co/poOtnWcmsc", "created_at": "2021-12-17T20:50:23.000Z", "lang": "es", "reply_settings": "everyone", "author_id": "45840741", "id": "1471945897038729217", "possibly_sensitive": false, "referenced_tweets": [{"type": "quoted", "id": "1471895454103314433"}], "public_metrics": {"retweet_count": 1, "reply_count": 2, "like_count": 18, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "65", "name": "Interests and Hobbies Vertical", "description": "Top level interests and hobbies groupings, like Food or Travel"}, "entity": {"id": "1280550787207147521", "name": "Arts & culture"}}]}, {"entities": {"annotations": [{"start": 34, "end": 48, "probability": 0.7699, "type": "Organization", "normalized_text": "Emerson College"}, {"start": 51, "end": 70, "probability": 0.8445, "type": "Person", "normalized_text": "David Foster Wallace"}], "mentions": [{"start": 0, "end": 13, "username": "slentine2010", "id": "1681097215"}], "urls": [{"start": 88, "end": 111, "url": "https://t.co/LYHGQfxvgC", "expanded_url": "https://www.esquire.com/entertainment/movies/a15334056/paul-thomas-anderson-david-foster-wallace-reddit/", "display_url": "esquire.com/entertainment/\u2026"}]}, "source": "Twitter Web App", "conversation_id": "1471842268072095754", "text": "@slentine2010 And when he went to Emerson College, David Foster Wallace was his teacher https://t.co/LYHGQfxvgC", "created_at": "2021-12-17T18:06:28.000Z", "lang": "en", "in_reply_to_user_id": "1681097215", "reply_settings": "everyone", "author_id": "29092660", "id": "1471904645836591104", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1471842268072095754"}], "public_metrics": {"retweet_count": 0, "reply_count": 1, "like_count": 1, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"entities": {"annotations": [{"start": 56, "end": 71, "probability": 0.9829, "type": "Person", "normalized_text": "Jeffrey Lawrence"}, {"start": 76, "end": 82, "probability": 0.3995, "type": "Organization", "normalized_text": "US Open"}, {"start": 85, "end": 104, "probability": 0.9748, "type": "Person", "normalized_text": "David Foster Wallace"}], "mentions": [{"start": 29, "end": 44, "username": "Ariz_Quarterly", "id": "789573528458670080"}], "urls": [{"start": 146, "end": 169, "url": "https://t.co/iham6vILfC", "expanded_url": "https://bit.ly/3q8k8ly", "display_url": "bit.ly/3q8k8ly", "images": [{"url": "https://pbs.twimg.com/news_img/1471231338040680452/xtA_HeTF?format=jpg&name=orig", "width": 600, "height": 910}, {"url": "https://pbs.twimg.com/news_img/1471231338040680452/xtA_HeTF?format=jpg&name=150x150", "width": 150, "height": 150}], "status": 200, "title": "Article", "description": "In lieu of an abstract, here is a brief excerpt of the content: Postmodernist discourses are often exclusionary even when, having been accused of lacking concrete relevance, they call attention to and appropriate the experience of \u201cdifference\u201d and \u201cotherness\u201d in order to provide themselves with oppositional political meaning, legitimacy, and immediacy. Very few African-American intellectuals have talked or written about postmodernism. Recently at a dinner party, I talked about trying to grapple with the", "unwound_url": "https://muse.jhu.edu/article/27283?utm_source=twitter&utm_medium=social&utm_campaign=arq121721&utm_content=JHUP"}, {"start": 170, "end": 193, "url": "https://t.co/SrLkMELsYj", "expanded_url": "https://twitter.com/JHUPress/status/1471857768462176268/photo/1", "display_url": "pic.twitter.com/SrLkMELsYj"}]}, "source": "Hootsuite Inc.", "conversation_id": "1471857768462176268", "attachments": {"media_keys": ["3_1471857766570549250"]}, "text": "OUT NOW: The latest issue of @Ariz_Quarterly, including Jeffrey Lawrence's \"US Open? David Foster Wallace, Tennis and the Crisis of Meritocracy\": https://t.co/iham6vILfC https://t.co/SrLkMELsYj", "created_at": "2021-12-17T15:00:12.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "29731124", "id": "1471857768462176268", "possibly_sensitive": false, "public_metrics": {"retweet_count": 0, "reply_count": 0, "like_count": 0, "quote_count": 0}, "context_annotations": [{"domain": {"id": "3", "name": "TV Shows", "description": "Television shows from around the world"}, "entity": {"id": "10044747023", "name": "US Open"}}, {"domain": {"id": "6", "name": "Sports Event"}, "entity": {"id": "1398019721518219264", "name": "U.S. Open Tennis"}}, {"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"source": "Twitter for iPhone", "conversation_id": "1471808169630392327", "text": "\u201cLa verit\u00e0 ti render\u00e0 libero. Ma solo quando avr\u00e0 finito con te\u201d\n\nDavid Foster Wallace, Infinite Jest", "created_at": "2021-12-17T11:43:07.000Z", "lang": "it", "reply_settings": "everyone", "author_id": "1311755732782657536", "id": "1471808169630392327", "possibly_sensitive": false, "public_metrics": {"retweet_count": 5, "reply_count": 0, "like_count": 37, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}], "includes": {"users": [{"location": "New York City", "profile_image_url": "https://pbs.twimg.com/profile_images/797105077219639296/sIN2ZMVs_normal.jpg", "created_at": "2009-11-17T06:00:39.000Z", "pinned_tweet_id": "1239894564149891072", "verified": true, "username": "jerrysaltz", "name": "Jerry Saltz", "protected": false, "description": "Jerry Saltz: Senior Art Critic; New York Magazine. 2018 Pulitzer Prize in Criticism. Author of NYT Best Seller \u201cHow To Be an Artist.\u201d 2-time ASME award winner.", "id": "90573676", "url": "", "public_metrics": {"followers_count": 533796, "following_count": 3991, "tweet_count": 61093, "listed_count": 2343}}, {"profile_image_url": "https://pbs.twimg.com/profile_images/1230605399491043331/u_OcQ5bL_normal.png", "created_at": "2009-02-02T18:20:38.000Z", "pinned_tweet_id": "1461016382120808463", "verified": true, "username": "Backstage", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/VBU3lmc1t6", "expanded_url": "http://www.backstage.com", "display_url": "backstage.com"}]}, "description": {"hashtags": [{"start": 71, "end": 80, "tag": "IGotCast"}]}}, "name": "Backstage", "protected": false, "description": "The most trusted name in casting since 1960. Tweet us with the hashtag #IGotCast and let us know if you've been cast through Backstage!", "id": "19920585", "url": "https://t.co/VBU3lmc1t6", "public_metrics": {"followers_count": 137997, "following_count": 2780, "tweet_count": 76552, "listed_count": 1755}}, {"location": "Philippines", "profile_image_url": "https://pbs.twimg.com/profile_images/1466664667154370565/F8gd8lHJ_normal.jpg", "created_at": "2009-05-04T13:45:10.000Z", "pinned_tweet_id": "1458400249605541893", "verified": true, "username": "jodeszgavilan", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/TOWtr0cvoo", "expanded_url": "https://rappler.com/author/jodesz-gavilan", "display_url": "rappler.com/author/jodesz-\u2026"}]}, "description": {"hashtags": [{"start": 133, "end": 149, "tag": "StopTheKillings"}], "mentions": [{"start": 52, "end": 66, "username": "rapplerdotcom"}, {"start": 67, "end": 79, "username": "newsbreakph"}]}}, "name": "Jodesz Gavilan", "protected": false, "description": "Journalist covering human rights, impunity, etc for @rapplerdotcom @newsbreakph. Host, Newsbreak: Beyond the Stories. Books + OSINT. #StopTheKillings \ud83c\uddf5\ud83c\udded", "id": "37662244", "url": "https://t.co/TOWtr0cvoo", "public_metrics": {"followers_count": 2711, "following_count": 772, "tweet_count": 100313, "listed_count": 66}}, {"location": "Adelaide / Manila", "profile_image_url": "https://pbs.twimg.com/profile_images/1383967272000049156/5oRmdeoJ_normal.jpg", "created_at": "2017-10-10T05:01:17.000Z", "pinned_tweet_id": "1279417624296779776", "verified": false, "username": "glennndiaz", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/EADMN6S6RM", "expanded_url": "http://glenndiaz.ph", "display_url": "glenndiaz.ph"}]}, "description": {"mentions": [{"start": 5, "end": 17, "username": "ateneopress"}, {"start": 28, "end": 42, "username": "UniofAdelaide"}]}}, "name": "Glenn Diaz", "protected": false, "description": "TQO (@ateneopress) PhD cand @UniofAdelaide Anti-imperialist \u262d", "id": "917615998047367168", "url": "https://t.co/EADMN6S6RM", "public_metrics": {"followers_count": 3400, "following_count": 708, "tweet_count": 25599, "listed_count": 10}}, {"location": "mollie.goodfellow@gmail.com", "profile_image_url": "https://pbs.twimg.com/profile_images/1466014663808856065/CgekXjsd_normal.jpg", "created_at": "2009-10-29T16:13:14.000Z", "verified": true, "username": "hansmollman", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/qFKl4ydBYd", "expanded_url": "http://molliegoodfellow.co.uk", "display_url": "molliegoodfellow.co.uk"}]}}, "name": "Mollie Goodfellow", "protected": false, "description": "a writer for many things. cute and precariously employed. it\u2019s probably a joke. she/her.", "id": "86101978", "url": "https://t.co/qFKl4ydBYd", "public_metrics": {"followers_count": 80169, "following_count": 2992, "tweet_count": 85704, "listed_count": 379}}, {"location": "The arboreal dell. (he/him) ", "profile_image_url": "https://pbs.twimg.com/profile_images/1445524623253139462/h_BdjALu_normal.jpg", "created_at": "2012-11-23T18:41:40.000Z", "pinned_tweet_id": "1469967027158802435", "verified": false, "username": "el_fodongo", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/gAIIBvAWyR", "expanded_url": "https://ko-fi.com/timmacgabhann", "display_url": "ko-fi.com/timmacgabhann"}]}, "description": {"mentions": [{"start": 84, "end": 92, "username": "wnbooks"}]}}, "name": "tim, the chancellor of christmas and germany also", "protected": false, "description": "slime man, mulch lord and full-time mayor / wrote CALL HIM MINE & HOW TO BE NOWHERE @wnbooks / i have a v over-zealous block bot, sorry in advance", "id": "966631615", "url": "https://t.co/gAIIBvAWyR", "public_metrics": {"followers_count": 3951, "following_count": 1449, "tweet_count": 22659, "listed_count": 0}}, {"location": "Cardiff/London", "profile_image_url": "https://pbs.twimg.com/profile_images/750985536043638784/YDfIaPP3_normal.jpg", "created_at": "2009-09-23T18:38:17.000Z", "pinned_tweet_id": "1355115824076500992", "verified": true, "username": "tristandross", "entities": {"description": {"mentions": [{"start": 29, "end": 36, "username": "online"}]}}, "name": "Stan Account", "protected": false, "description": "having a laugh correspondent @online", "id": "76718852", "url": "", "public_metrics": {"followers_count": 25204, "following_count": 1067, "tweet_count": 74514, "listed_count": 100}}, {"location": "TVE", "profile_image_url": "https://pbs.twimg.com/profile_images/1342186399211130884/knZ4MAVh_normal.jpg", "created_at": "2009-07-01T19:26:59.000Z", "verified": true, "username": "camaraabierta", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/9FbNCB4LV4", "expanded_url": "http://www.rtve.es/camaraabierta", "display_url": "rtve.es/camaraabierta"}]}, "description": {"hashtags": [{"start": 143, "end": 156, "tag": "Canal24Horas"}], "mentions": [{"start": 12, "end": 17, "username": "rtve"}, {"start": 81, "end": 92, "username": "danisesena"}]}}, "name": "C\u00e1mara abierta", "protected": false, "description": "Programa de @rtve sobre internet como plataforma de creaci\u00f3n. Dirige y presenta: @danisesena. Emisi\u00f3n: S\u00e1bados 08:30h 16:45h y martes 00:45 en #Canal24Horas", "id": "52823380", "url": "https://t.co/9FbNCB4LV4", "public_metrics": {"followers_count": 20363, "following_count": 1112, "tweet_count": 4238, "listed_count": 538}}, {"location": "La red", "profile_image_url": "https://pbs.twimg.com/profile_images/1341417142487576579/tdwXlqkF_normal.jpg", "created_at": "2010-07-02T09:05:50.000Z", "pinned_tweet_id": "1473262330859429894", "verified": false, "username": "Notodofilmfest_", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/rVtgyqbvOl", "expanded_url": "https://linktr.ee/notodofilmfest", "display_url": "linktr.ee/notodofilmfest"}]}, "description": {"mentions": [{"start": 11, "end": 22, "username": "la_fabrica"}, {"start": 25, "end": 38, "username": "fesserjavier"}]}}, "name": "Notodofilmfest", "protected": false, "description": "Creado por @la_fabrica y @fesserjavier Notodofilmfest21 \ud83d\ude80 20/12 Gala de entrega de premios", "id": "161988497", "url": "https://t.co/rVtgyqbvOl", "public_metrics": {"followers_count": 10313, "following_count": 558, "tweet_count": 9429, "listed_count": 299}}, {"profile_image_url": "https://pbs.twimg.com/profile_images/1349413607403098117/Y_PkYFLR_normal.jpg", "created_at": "2011-05-04T14:10:06.000Z", "verified": true, "username": "jdceulaer", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/EjGthWzarD", "expanded_url": "http://www.demorgen.be", "display_url": "demorgen.be"}]}, "description": {"mentions": [{"start": 45, "end": 53, "username": "mboudry"}]}}, "name": "Jo\u00ebl De Ceulaer", "protected": false, "description": "Journalist / \u2018Eerste hulp bij pandemie' (met @mboudry, 2021) / \u2018De tragiek van de macht\u2019 (2020) / 'Hoera! De democratie is niet perfect' (2019)", "id": "292945752", "url": "https://t.co/EjGthWzarD", "public_metrics": {"followers_count": 56565, "following_count": 2129, "tweet_count": 72788, "listed_count": 444}}, {"location": "Antwerpen, Belgi\u00eb", "profile_image_url": "https://pbs.twimg.com/profile_images/1452300902350655488/4iukPsHy_normal.jpg", "created_at": "2013-02-17T13:46:33.000Z", "pinned_tweet_id": "1294333815255638021", "verified": false, "username": "TomA3aenssens", "entities": {"description": {"hashtags": [{"start": 67, "end": 82, "tag": "Triggerwarning"}]}}, "name": "Kerstronk Adriaenssens \ud83d\udc45\ud83e\udd1f\ud83c\udfff", "protected": false, "description": "\u2018vuilblond en proper rechts\u2019 Antwerpen, Antwaarps \u00e9n Antwerp ! \ud83d\udd34\u26aa\ufe0f #Triggerwarning !", "id": "1189576208", "url": "", "public_metrics": {"followers_count": 3160, "following_count": 1881, "tweet_count": 55698, "listed_count": 8}}, {"profile_image_url": "https://pbs.twimg.com/profile_images/1280751248006885377/v9YWpADl_normal.jpg", "created_at": "2009-07-02T13:24:22.000Z", "pinned_tweet_id": "1442908778890883072", "verified": false, "username": "WimOosterlinck", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/dvvz9avo2m", "expanded_url": "http://wimoosterlinck.be", "display_url": "wimoosterlinck.be"}]}, "description": {"hashtags": [{"start": 44, "end": 64, "tag": "ikwildegeenkinderen"}, {"start": 65, "end": 76, "tag": "drieboeken"}]}}, "name": "Wim Oosterlinck \ud83c\udf99", "protected": false, "description": "radio - podcast - film - boeken - wijs volk #ikwildegeenkinderen #drieboeken", "id": "53060760", "url": "https://t.co/dvvz9avo2m", "public_metrics": {"followers_count": 57957, "following_count": 381, "tweet_count": 16487, "listed_count": 176}}, {"location": "Sheffield, UK", "profile_image_url": "https://pbs.twimg.com/profile_images/1463200677577084931/A9XtZIPd_normal.jpg", "created_at": "2014-04-09T13:09:24.000Z", "pinned_tweet_id": "1465959871011074050", "verified": true, "username": "BenWilko85", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/UMQgu99gKl", "expanded_url": "http://theguardian.com/profile/ben-wilkinson", "display_url": "theguardian.com/profile/ben-wi\u2026"}]}, "description": {"mentions": [{"start": 83, "end": 94, "username": "SerenBooks"}, {"start": 128, "end": 140, "username": "LivUniPress"}]}}, "name": "Ben Wilkinson", "protected": false, "description": "Writer, poet, foolhardy critic. Poems: Way More Than Luck (2018), Same Difference (@SerenBooks, 2022). Criticism: Don Paterson (@LivUniPress, 2021).", "id": "2435437579", "url": "https://t.co/UMQgu99gKl", "public_metrics": {"followers_count": 2153, "following_count": 1320, "tweet_count": 5450, "listed_count": 20}}, {"location": "Sydney", "profile_image_url": "https://pbs.twimg.com/profile_images/1421821650366918656/S9sIijlN_normal.jpg", "created_at": "2008-12-03T23:35:17.000Z", "pinned_tweet_id": "1466212849865224192", "verified": true, "username": "mclayfield", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/FjTEjwA8h6", "expanded_url": "http://www.matthewclayfield.com/", "display_url": "matthewclayfield.com"}]}}, "name": "Matthew Clayfield", "protected": false, "description": "Lapsed journalist. Occasional critic and screenwriter. Would like to be a full-time novelist.", "id": "17853057", "url": "https://t.co/FjTEjwA8h6", "public_metrics": {"followers_count": 4386, "following_count": 1963, "tweet_count": 14416, "listed_count": 170}}, {"profile_image_url": "https://pbs.twimg.com/profile_images/1357934982866558979/LnmUTf3C_normal.jpg", "created_at": "2021-02-06T06:10:16.000Z", "pinned_tweet_id": "1467198887656054786", "verified": false, "username": "george_salis", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/SDAGWoFwZR", "expanded_url": "https://linktr.ee/georgesalis", "display_url": "linktr.ee/georgesalis"}]}}, "name": "George Salis", "protected": false, "description": "I'm the author of Sea Above, Sun Below. I'm currently working on a maximalist novel titled Morphological Echoes. I run The Collidescope.", "id": "1357934563838812161", "url": "https://t.co/SDAGWoFwZR", "public_metrics": {"followers_count": 473, "following_count": 106, "tweet_count": 1294, "listed_count": 4}}, {"location": "Dept. of Unspecified Services", "profile_image_url": "https://pbs.twimg.com/profile_images/1472014753103028225/33Rr7X2S_normal.jpg", "created_at": "2011-07-22T03:01:12.000Z", "pinned_tweet_id": "1460666619349409800", "verified": false, "username": "TheLastSisyphus", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/oGjVFmtx4k", "expanded_url": "https://linktr.ee/TheLastSisyphusPodcast", "display_url": "linktr.ee/TheLastSisyphu\u2026"}]}}, "name": "C.G.", "protected": false, "description": "Author of PROJECT: SLEEPLESS DREAM | Writer | Teacher | Armchair Philosopher | UFO Crackpot | Hockey coach, player, and fan", "id": "340072715", "url": "https://t.co/oGjVFmtx4k", "public_metrics": {"followers_count": 1542, "following_count": 292, "tweet_count": 5296, "listed_count": 23}}, {"profile_image_url": "https://pbs.twimg.com/profile_images/1472285559989194764/S-ErLzy0_normal.jpg", "created_at": "2009-06-09T13:54:11.000Z", "pinned_tweet_id": "680849321445597185", "verified": true, "username": "Aglaia_Berlutti", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/CnXF4UtMRb", "expanded_url": "http://www.aglaiaberlutti.com", "display_url": "aglaiaberlutti.com"}]}}, "name": "Aglaia Berlutti", "protected": false, "description": "Bruja y hereje. A veces grosera y quiz\u00e1 demente. Fot\u00f3grafa por pasi\u00f3n, amante de las palabras por convicci\u00f3n. Firme creyente en el poder del pensamiento libre.", "id": "45840741", "url": "https://t.co/CnXF4UtMRb", "public_metrics": {"followers_count": 28510, "following_count": 7146, "tweet_count": 364749, "listed_count": 333}}, {"location": "Caracas", "profile_image_url": "https://pbs.twimg.com/profile_images/1472157338656288772/aJgA76-t_normal.jpg", "created_at": "2016-11-08T14:59:11.000Z", "pinned_tweet_id": "1076067402289434624", "verified": false, "username": "velazquera", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/cGCgRnNTtp", "expanded_url": "https://anamariavelazquezanderson.blogspot.com/", "display_url": "anamariavelazquezanderson.blogspot.com"}]}}, "name": "Ana Mar\u00eda Vel\u00e1zquez Anderson", "protected": false, "description": "Profa de Literatura Universidad Metropolitana.\nGender studies\nEscritora.", "id": "796004142007324672", "url": "https://t.co/cGCgRnNTtp", "public_metrics": {"followers_count": 569, "following_count": 746, "tweet_count": 2528, "listed_count": 4}}, {"location": "Boston, Massachusetts", "profile_image_url": "https://pbs.twimg.com/profile_images/1467838713690525706/8PrRis4h_normal.jpg", "created_at": "2009-04-05T23:26:03.000Z", "pinned_tweet_id": "1470414586776072194", "verified": true, "username": "JahHills", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/m6CCXce0pr", "expanded_url": "https://linktr.ee/ryanhwalsh", "display_url": "linktr.ee/ryanhwalsh"}]}, "description": {"mentions": [{"start": 72, "end": 85, "username": "penguinpress"}]}}, "name": "Ryan H. Walsh", "protected": false, "description": "Writer/Musician \u2738 Hallelujah The Hills (the band) \u2738 Astral Weeks (2018 @penguinpress) \u2738 I'll tell you the whole story as soon as we sit down.", "id": "29092660", "url": "https://t.co/m6CCXce0pr", "public_metrics": {"followers_count": 5772, "following_count": 2554, "tweet_count": 62921, "listed_count": 135}}, {"profile_image_url": "https://pbs.twimg.com/profile_images/378800000318879657/b61db9b1fc6981d676a363d6cf0b2318_normal.jpeg", "created_at": "2013-08-18T16:10:36.000Z", "verified": false, "username": "slentine2010", "name": "Scott Lentine", "protected": false, "description": "Autism self-advocate. Poet. I love dogs, traveling, advocating for autism, playing the piano, making new friends, and the beach.", "id": "1681097215", "url": "", "public_metrics": {"followers_count": 510, "following_count": 4081, "tweet_count": 7250, "listed_count": 3}}, {"location": "Baltimore, MD", "profile_image_url": "https://pbs.twimg.com/profile_images/666258588776591360/CbjPccui_normal.jpg", "created_at": "2009-04-08T14:50:18.000Z", "verified": true, "username": "JHUPress", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/HmAC3AEXHK", "expanded_url": "http://www.press.jhu.edu/", "display_url": "press.jhu.edu"}]}, "description": {"mentions": [{"start": 135, "end": 147, "username": "ProjectMUSE"}]}}, "name": "Johns Hopkins University Press", "protected": false, "description": "Publishing cutting edge books and journals since 1878. We envision a future where knowledge enriches the life of every person. Home to @ProjectMUSE.", "id": "29731124", "url": "https://t.co/HmAC3AEXHK", "public_metrics": {"followers_count": 21464, "following_count": 1679, "tweet_count": 19287, "listed_count": 657}}, {"location": "Tucson, AZ", "profile_image_url": "https://pbs.twimg.com/profile_images/969262678043631616/pSwjVKeh_normal.jpg", "created_at": "2016-10-21T21:06:13.000Z", "verified": false, "username": "Ariz_Quarterly", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/o4sshEKfRT", "expanded_url": "https://azq.arizona.edu", "display_url": "azq.arizona.edu"}]}}, "name": "Arizona Quarterly", "protected": false, "description": "Publishing cultural, formal, & theoretical interventions in current academic conversations around American lit, broadly defined.", "id": "789573528458670080", "url": "https://t.co/o4sshEKfRT", "public_metrics": {"followers_count": 357, "following_count": 1080, "tweet_count": 519, "listed_count": 5}}, {"location": "Roma", "profile_image_url": "https://pbs.twimg.com/profile_images/1338569526871543811/metxtMMn_normal.jpg", "created_at": "2020-10-01T19:52:03.000Z", "pinned_tweet_id": "1412324780783636486", "verified": true, "username": "AndreaVenanzoni", "entities": {"url": {"urls": [{"start": 0, "end": 23, "url": "https://t.co/Ack6c8IoCd", "expanded_url": "http://www.forumnazionaleprofessioni.it", "display_url": "forumnazionaleprofessioni.it"}]}, "description": {"mentions": [{"start": 49, "end": 64, "username": "LDO_Fondazione"}, {"start": 67, "end": 80, "username": "atlanticomag"}, {"start": 82, "end": 94, "username": "ilfoglio_it"}, {"start": 96, "end": 100, "username": "tpi"}]}}, "name": "Andrea Venanzoni", "protected": false, "description": "Paleolibertarian, Centro Studi PRAXIS, scrivo su @LDO_Fondazione , @atlanticomag, @ilfoglio_it, @tpi", "id": "1311755732782657536", "url": "https://t.co/Ack6c8IoCd", "public_metrics": {"followers_count": 5200, "following_count": 1958, "tweet_count": 10805, "listed_count": 22}}], "tweets": [{"entities": {"annotations": [{"start": 37, "end": 43, "probability": 0.8885, "type": "Person", "normalized_text": "Franzen"}], "mentions": [{"start": 0, "end": 14, "username": "jodeszgavilan", "id": "37662244"}]}, "source": "Twitter for iPhone", "conversation_id": "1473587774821437441", "text": "@jodeszgavilan Congrats on coming to Franzen w/o the burden of his other shenanigans hahah.", "created_at": "2021-12-22T09:48:57.000Z", "lang": "en", "in_reply_to_user_id": "37662244", "reply_settings": "everyone", "author_id": "917615998047367168", "id": "1473591381436162052", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1473590214342094848"}], "public_metrics": {"retweet_count": 0, "reply_count": 1, "like_count": 0, "quote_count": 0}}, {"entities": {"annotations": [{"start": 0, "end": 19, "probability": 0.8219, "type": "Person", "normalized_text": "david foster wallace"}, {"start": 38, "end": 49, "probability": 0.9263, "type": "Person", "normalized_text": "david foster"}]}, "source": "Twitter Web App", "conversation_id": "1392179447051165696", "text": "david foster wallace's dog was called david foster gromit", "created_at": "2021-05-11T18:07:00.000Z", "lang": "en", "reply_settings": "everyone", "author_id": "966631615", "id": "1392179447051165696", "possibly_sensitive": false, "public_metrics": {"retweet_count": 192, "reply_count": 9, "like_count": 1333, "quote_count": 2}}, {"entities": {"annotations": [{"start": 63, "end": 82, "probability": 0.9907, "type": "Person", "normalized_text": "David Foster Wallace"}, {"start": 88, "end": 103, "probability": 0.8899, "type": "Person", "normalized_text": "\u00d3scar Villasante"}], "mentions": [{"start": 28, "end": 42, "username": "camaraabierta", "id": "52823380"}], "urls": [{"start": 105, "end": 128, "url": "https://t.co/758gXJJsrc", "expanded_url": "https://www.youtube.com/watch?v=U9nkvtV8MMM&t=5s", "display_url": "youtube.com/watch?v=U9nkvt\u2026", "images": [{"url": "https://pbs.twimg.com/news_img/1473269052583915531/y4dzsT2L?format=jpg&name=orig", "width": 1280, "height": 720}, {"url": "https://pbs.twimg.com/news_img/1473269052583915531/y4dzsT2L?format=jpg&name=150x150", "width": 150, "height": 150}], "status": 200, "title": "Peque\u00f1o homenaje a David Foster Wallace", "description": "Dos grupos de jubilados charlando en una playa. El primero de temas muy intelectuales, el segundo de muy banales.", "unwound_url": "https://www.youtube.com/watch?v=U9nkvtV8MMM&t=5s"}]}, "source": "Twitter Web App", "conversation_id": "1473262330859429894", "text": "\u2728Premio al Mejor Documental\u2728@camaraabierta\n\"Peque\u00f1o Homenaje a David Foster Wallace\" de \u00d3scar Villasante\nhttps://t.co/758gXJJsrc", "created_at": "2021-12-21T12:29:26.000Z", "lang": "es", "in_reply_to_user_id": "161988497", "reply_settings": "everyone", "author_id": "161988497", "id": "1473269378565160974", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1473268624597168133"}], "public_metrics": {"retweet_count": 1, "reply_count": 0, "like_count": 2, "quote_count": 0}, "context_annotations": [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}]}, {"source": "Twitter for iPhone", "conversation_id": "1472984031864279045", "attachments": {"media_keys": ["3_1472987836517339136"]}, "entities": {"mentions": [{"start": 0, "end": 10, "username": "jdceulaer", "id": "292945752"}, {"start": 11, "end": 26, "username": "WimOosterlinck", "id": "53060760"}], "urls": [{"start": 49, "end": 72, "url": "https://t.co/pFDY3OjlEU", "expanded_url": "https://twitter.com/TomA3aenssens/status/1472987840288116740/photo/1", "display_url": "pic.twitter.com/pFDY3OjlEU"}]}, "text": "@jdceulaer @WimOosterlinck Ik gok op Bultinck.\n\ud83d\ude05 https://t.co/pFDY3OjlEU", "created_at": "2021-12-20T17:50:42.000Z", "lang": "nl", "in_reply_to_user_id": "292945752", "reply_settings": "everyone", "author_id": "1189576208", "id": "1472987840288116740", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1472984904023658502"}], "public_metrics": {"retweet_count": 0, "reply_count": 1, "like_count": 8, "quote_count": 0}, "context_annotations": [{"domain": {"id": "3", "name": "TV Shows", "description": "Television shows from around the world"}, "entity": {"id": "10029436825", "name": "Gok : le\u00e7ons de style"}}]}, {"source": "Twitter Web App", "conversation_id": "1472600656787742725", "entities": {"mentions": [{"start": 0, "end": 16, "username": "TheLastSisyphus", "id": "340072715"}]}, "text": "@TheLastSisyphus I read this one but unfortunately not what I need. I appreciate the link though, brother \ud83d\ude4f", "created_at": "2021-12-19T16:28:08.000Z", "lang": "en", "in_reply_to_user_id": "340072715", "reply_settings": "everyone", "author_id": "1357934563838812161", "id": "1472604673962037250", "possibly_sensitive": false, "referenced_tweets": [{"type": "replied_to", "id": "1472601979729981447"}], "public_metrics": {"retweet_count": 0, "reply_count": 1, "like_count": 1, "quote_count": 0}}, {"entities": {"annotations": [{"start": 114, "end": 130, "probability": 0.9954, "type": "Person", "normalized_text": "Alfredo Rodr\u00edguez"}, {"start": 173, "end": 209, "probability": 0.7925, "type": "Person", "normalized_text": "Kelly M Grandal Ana Teresa Rodr\u00edguez"}, {"start": 214, "end": 218, "probability": 0.5481, "type": "Person", "normalized_text": "Riera"}, {"start": 260, "end": 272, "probability": 0.9384, "type": "Person", "normalized_text": "Truman Capote"}], "mentions": [{"start": 18, "end": 25, "username": "Unimet", "id": "32280530"}, {"start": 155, "end": 171, "username": "Aglaia_Berlutti", "id": "45840741"}, {"start": 222, "end": 230, "username": "yoyiahu", "id": "62674070"}], "urls": [{"start": 274, "end": 297, "url": "https://t.co/c2y1pSLJCS", "expanded_url": "https://twitter.com/velazquera/status/1471895454103314433/photo/1", "display_url": "pic.twitter.com/c2y1pSLJCS"}]}, "source": "Twitter for Android", "conversation_id": "1471895454103314433", "attachments": {"media_keys": ["3_1471895448835215364"]}, "text": "Nueva publicaci\u00f3n @Unimet en f\u00edsico con ensayos sobre Literatura norteamericana\nGracias a nuestro editor profesor Alfredo Rodr\u00edguez y a la colaboraci\u00f3n de @Aglaia_Berlutti Kelly M Grandal Ana Teresa Rodr\u00edguez de Riera y @yoyiahu \nEl m\u00edo es sobre el terrible Truman Capote https://t.co/c2y1pSLJCS", "created_at": "2021-12-17T17:29:57.000Z", "lang": "es", "reply_settings": "everyone", "author_id": "796004142007324672", "id": "1471895454103314433", "possibly_sensitive": false, "public_metrics": {"retweet_count": 10, "reply_count": 3, "like_count": 31, "quote_count": 1}, "context_annotations": [{"domain": {"id": "65", "name": "Interests and Hobbies Vertical", "description": "Top level interests and hobbies groupings, like Food or Travel"}, "entity": {"id": "1280550787207147521", "name": "Arts & culture"}}]}, {"entities": {"annotations": [{"start": 31, "end": 50, "probability": 0.9629, "type": "Person", "normalized_text": "Paul Thomas Anderson"}, {"start": 65, "end": 68, "probability": 0.962, "type": "Person", "normalized_text": "Lynn"}, {"start": 77, "end": 90, "probability": 0.9166, "type": "Person", "normalized_text": "Ernie Anderson"}, {"start": 117, "end": 119, "probability": 0.8978, "type": "Organization", "normalized_text": "ABC"}, {"start": 122, "end": 130, "probability": 0.4358, "type": "Person", "normalized_text": "Ghoulardi"}, {"start": 135, "end": 143, "probability": 0.8304, "type": "Place", "normalized_text": "Cleveland"}, {"start": 178, "end": 181, "probability": 0.6344, "type": "Organization", "normalized_text": "WCVB"}], "mentions": [{"start": 0, "end": 9, "username": "JahHills", "id": "29092660"}]}, "source": "Twitter Web App", "conversation_id": "1471842268072095754", "text": "@JahHills I love the fact that Paul Thomas Anderson\u2019s father was Lynn native Ernie Anderson, the promo announcer for ABC, Ghoulardi in Cleveland and an announcer for the news on WCVB 5.", "created_at": "2021-12-17T13:58:36.000Z", "lang": "en", "in_reply_to_user_id": "29092660", "reply_settings": "everyone", "author_id": "1681097215", "id": "1471842268072095754", "possibly_sensitive": false, "public_metrics": {"retweet_count": 0, "reply_count": 1, "like_count": 2, "quote_count": 0}, "context_annotations": [{"domain": {"id": "45", "name": "Brand Vertical", "description": "Top level entities that describe a Brands industry"}, "entity": {"id": "781974597310615553", "name": "Entertainment"}}, {"domain": {"id": "46", "name": "Brand Category", "description": "Categories within Brand Verticals that narrow down the scope of Brands"}, "entity": {"id": "781974596157181956", "name": "Online Site"}}, {"domain": {"id": "46", "name": "Brand Category", "description": "Categories within Brand Verticals that narrow down the scope of Brands"}, "entity": {"id": "781974597105094656", "name": "TV/Movies Related"}}, {"domain": {"id": "47", "name": "Brand", "description": "Brands and Companies"}, "entity": {"id": "1065650820518051840", "name": "ABC News", "description": "ABC News"}}]}], "media": [{"type": "photo", "alt_text": "A close-up of a tennis ball resting on a tennis court in front of a net. ", "height": 442, "width": 789, "url": "https://pbs.twimg.com/media/FG0W1yhWYAI-B0D.jpg", "media_key": "3_1471857766570549250"}]}, "meta": {"newest_id": "1473826748169175048", "oldest_id": "1471808169630392327", "result_count": 13}, "__twarc": {"url": "https://api.twitter.com/2/tweets/search/recent?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ctext%2Cpossibly_sensitive%2Creferenced_tweets%2Creply_settings%2Csource%2Cwithheld&user.fields=created_at%2Cdescription%2Centities%2Cid%2Clocation%2Cname%2Cpinned_tweet_id%2Cprofile_image_url%2Cprotected%2Cpublic_metrics%2Curl%2Cusername%2Cverified%2Cwithheld&media.fields=alt_text%2Cduration_ms%2Cheight%2Cmedia_key%2Cpreview_image_url%2Ctype%2Curl%2Cwidth%2Cpublic_metrics&poll.fields=duration_minutes%2Cend_datetime%2Cid%2Coptions%2Cvoting_status&place.fields=contained_within%2Ccountry%2Ccountry_code%2Cfull_name%2Cgeo%2Cid%2Cname%2Cplace_type&query=David+Foster+Wallace+is%3Averified&max_results=100", "version": "2.8.2", "retrieved_at": "2021-12-23T14:36:34+00:00"}}

melaniewalsh/Intro-Cultural-AnalyticsThe tweets and tweet metadata above are being printed to the notebook. But we want to save this information to a file so we can work with it.

To output Twitter data to a file, we can also include a filename with the “.jsonl” file extension, which stands for JSON lines, a special kind of JSON file.

!twarc2 search "David Foster Wallace is:verified" twitter-data/dfw_last_week.jsonl

100%|██████████████████| Processed 6 days/6 days [00:00<00:00, 13 tweets total ]

Theoretically, a tweet with “David,” “Foster”, and “Wallace” in different places would be matched by the more general search above. If we wanted to match the words “David Foster Wallace” exactly, we would need to put “David Foster Wallace” in quotation marks and “escape” those quotation marks, so that twarc2 will know that our query shouldn’t end at the next quotation mark.

!twarc2 search "\"David Foster Wallace\" is:verified" twitter-data/dfw_exact.jsonl

100%|██████████████████| Processed 6 days/6 days [00:00<00:00, 13 tweets total ]

If you’re working on a Mac, you should be able to escape the quotation marks with backslashes \ before the characters, as shown in the example above. But if you’re working on a Windows computer, you may need to use triple quotations instead, for example:

twarc2 search """ "David Foster Wallace" is:verified""" twitter-data/dfw_exact.jsonl

Get Tweets (Academic Track, Full Twitter Archive)#

Attention

Remember that this functionality is only available to those who have an [Academic Research account](https://developer.twitter.com/en/products/twitter-api/academic-research).To collect tweets from Twitter’s entire historical archive, we need to add the --archive flag.

!twarc2 search "David Foster Wallace bro is:verified" --archive twitter-data/dfw_bro.jsonl

100%|██████████████| Processed 15 years/15 years [00:01<00:00, 30 tweets total ]

Convert JSONL to CSV#

To make our Twitter data easier to work with, we can convert our JSONL file to a CSV file with the twarc-csv plugin, which needs to be installed separately.

!pip install twarc-csv

Once installed, we can use the plug-in from twarc2 with the input filename for the JSONL and a desired output filename for the CSV file.

!twarc2 csv twitter-data/dfw_bro.jsonl twitter-data/dfw_bro.csv

100%|██████████████| Processed 83.7k/83.7k of input file [00:00<00:00, 2.51MB/s]

ℹ️

Parsed 30 tweets objects from 1 lines in the input file.

Wrote 30 rows and output 74 columns in the CSV.

By default, when converting from the JSONL file, twarc-csv will only include tweets that were directly returned from the search.

If you want you can also use --inline-referenced-tweets option to make “referenced” tweets into their own rows in the CSV file. For example, if a quote tweet matched our query, the tweet being quoted would also be included in the CSV file as its own row, even if it didn’t match our query. But as of v0.5.0 of twarc-csv this is no longer the default behavior.

Read in CSV#

Now we’re ready to explore the data!

To work with our tweet data, we can read in our CSV file with pandas and again parse the date column.

tweets_df = pd.read_csv('twitter-data/dfw_bro.csv',

parse_dates = ['created_at'])

If we scroll through this dataset, we can see that there are only 29 tweets that matched our search query, but there is a lot of metadata associated with each tweet. Scroll to the right to see all the information. What category surprises you the most? (For me, it’s the tweet author’s pinned tweet from their own timeline. Your pinned tweet gets attached to everything else you tweet!)

tweets_df

| id | conversation_id | referenced_tweets.replied_to.id | referenced_tweets.retweeted.id | referenced_tweets.quoted.id | author_id | in_reply_to_user_id | retweeted_user_id | quoted_user_id | created_at | text | lang | source | public_metrics.like_count | public_metrics.quote_count | public_metrics.reply_count | public_metrics.retweet_count | reply_settings | possibly_sensitive | withheld.scope | withheld.copyright | withheld.country_codes | entities.annotations | entities.cashtags | entities.hashtags | entities.mentions | entities.urls | context_annotations | attachments.media | attachments.media_keys | attachments.poll.duration_minutes | attachments.poll.end_datetime | attachments.poll.id | attachments.poll.options | attachments.poll.voting_status | attachments.poll_ids | author.id | author.created_at | author.username | author.name | author.description | author.entities.description.cashtags | author.entities.description.hashtags | author.entities.description.mentions | author.entities.description.urls | author.entities.url.urls | author.location | author.pinned_tweet_id | author.profile_image_url | author.protected | author.public_metrics.followers_count | author.public_metrics.following_count | author.public_metrics.listed_count | author.public_metrics.tweet_count | author.url | author.verified | author.withheld.scope | author.withheld.copyright | author.withheld.country_codes | geo.coordinates.coordinates | geo.coordinates.type | geo.country | geo.country_code | geo.full_name | geo.geo.bbox | geo.geo.type | geo.id | geo.name | geo.place_id | geo.place_type | __twarc.retrieved_at | __twarc.url | __twarc.version | Unnamed: 73 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1465671187497840642 | 1465671183370637315 | 1.465671e+18 | NaN | NaN | 78173848 | 78173848.0 | NaN | NaN | 2021-11-30 13:16:56+00:00 | It has little to do with the gender of the author, protagonist, etc. Olga Tokarczuk is just as appealing to male readers as David Foster Wallace (who is considered 'bro lit'). It seems to be more directly related, interestingly enough, to the postmodernist divide in literature. | en | Twitter Web App | 0 | 0 | 1 | 0 | everyone | False | NaN | NaN | NaN | [{"start": 69, "end": 82, "probability": 0.9898, "type": "Person", "normalized_text": "Olga Tokarczuk"}, {"start": 124, "end": 143, "probability": 0.9596, "type": "Person", "normalized_text": "David Foster Wallace"}] | NaN | NaN | NaN | NaN | [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070731935612198912", "name": "David Foster", "description": "Canadian musician, record producer, songwriter"}}, {"domain": {"id": "54", "name": "Musician", "description": "A musician in the world, like Adele or Bob Dylan"}, "entity": {"id": "1070731935612198912", "name... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 78173848 | 2009-09-29T00:58:33.000Z | TJCarpenterShow | Terry Wayne Carpenter, Jr. | Writer; shitposting. | NaN | NaN | NaN | NaN | NaN | Denver, CO | 1.263100e+18 | https://pbs.twimg.com/profile_images/1360259928091283457/REH2l3d-_normal.jpg | False | 7493 | 170 | 205 | 104059 | NaN | True | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 2021-12-23T14:37:25+00:00 | https://api.twitter.com/2/tweets/search/all?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ct... | 2.8.2 | NaN |

| 1 | 1412360883582418945 | 1412043478301880331 | 1.412361e+18 | NaN | NaN | 342644076 | 82979289.0 | NaN | NaN | 2021-07-06 10:40:49+00:00 | @TomKealy2 @aveek18 @maybeavalon Imo the great twitter literature injustice is that it is david foster wallace and not him that is smeared as the dumb bro contemporary author | en | Twitter Web App | 2 | 0 | 2 | 0 | everyone | False | NaN | NaN | NaN | [{"start": 90, "end": 109, "probability": 0.89, "type": "Person", "normalized_text": "david foster wallace"}] | NaN | NaN | [{"start": 0, "end": 10, "username": "TomKealy2", "id": "82979289", "created_at": "2009-10-16T21:36:07.000Z", "description": "Digital Writer.", "public_metrics": {"followers_count": 1519, "following_count": 1291, "tweet_count": 65190, "listed_count": 25}, "protected": false, "location": "Berlin, Germany", "name": "TomKealy 1\ufe0f\u20e34\ufe0f\u20e39\ufe0f\u20e3\ud83d\udea2", "verified": false... | NaN | [{"domain": {"id": "46", "name": "Brand Category", "description": "Categories within Brand Verticals that narrow down the scope of Brands"}, "entity": {"id": "781974596752842752", "name": "Services"}}, {"domain": {"id": "47", "name": "Brand", "description": "Brands and Companies"}, "entity": {"id": "10045225402", "name": "Twitter"}}] | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 342644076 | 2011-07-26T10:41:54.000Z | JeyyLowe | Josh Lowe | Policy-focused content guy. (Mostly) ex-journalist. Mediating between evidence and action at global data institute for child safety @EdinburghUni. Views mine. | NaN | NaN | [{"start": 132, "end": 145, "username": "EdinburghUni"}] | NaN | [{"start": 0, "end": 23, "url": "https://t.co/HAkzf1bnPR", "expanded_url": "https://www.ed.ac.uk/impact/research/digital-life/making-data-count-against-child-abuse", "display_url": "ed.ac.uk/impact/researc\u2026"}] | London, England | 1.461288e+18 | https://pbs.twimg.com/profile_images/1441561506634633221/qWUTnkEs_normal.jpg | False | 6190 | 3701 | 129 | 68000 | https://t.co/HAkzf1bnPR | True | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 2021-12-23T14:37:25+00:00 | https://api.twitter.com/2/tweets/search/all?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ct... | 2.8.2 | NaN |

| 2 | 1408833479304003589 | 1408773437431091202 | 1.408819e+18 | NaN | NaN | 15639774 | 4282171.0 | NaN | NaN | 2021-06-26 17:04:10+00:00 | @page88 @IngrahamAngle I heard a tech bro quote a line about cruise ship wait staff from David Foster Wallace's A Supposedly Fun Thing and said it showed how DFW really believed in great customer service. | en | TweetDeck | 11 | 0 | 1 | 0 | everyone | False | NaN | NaN | NaN | [{"start": 89, "end": 108, "probability": 0.9731, "type": "Person", "normalized_text": "David Foster Wallace"}, {"start": 158, "end": 160, "probability": 0.5814, "type": "Place", "normalized_text": "DFW"}] | NaN | NaN | [{"start": 0, "end": 7, "username": "page88", "id": "4282171", "created_at": "2007-04-12T02:18:44.000Z", "description": "Columnist @WIRED & host of THIS IS CRITICAL @Stitcher. Sometimes @latimesopinion, @TheAtlantic, @nytimes. PhD fwiw. Join us at @thiscriticalpod.", "public_metrics": {"followers_count": 150610, "following_count": 4024, "tweet_count": 53115, "listed_count": 2427}, "protected"... | NaN | [{"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1053753085489606656", "name": "Laura Ingraham", "description": "Laura Ingraham"}}, {"domain": {"id": "10", "name": "Person", "description": "Named people in the world like Nelson Mandela"}, "entity": {"id": "1070711281076715520", "name": "Virginia Heffernan", "descripti... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 15639774 | 2008-07-29T00:34:43.000Z | adamdavidson | Adam Davidson | Sharing the lessons I’ve learned from @ThisAmerLife @NewYorker and my baby, @planetmoney on how to make storytelling your superpower at https://t.co/QyMS00u10R | NaN | NaN | [{"start": 38, "end": 51, "username": "ThisAmerLife"}, {"start": 52, "end": 62, "username": "NewYorker"}, {"start": 76, "end": 88, "username": "planetmoney"}] | [{"start": 136, "end": 159, "url": "https://t.co/QyMS00u10R", "expanded_url": "http://www.masterfulstory.com", "display_url": "masterfulstory.com"}] | [{"start": 0, "end": 23, "url": "https://t.co/P16tJd6SDa", "expanded_url": "https://www.masterfulstory.com/", "display_url": "masterfulstory.com"}] | Charlotte, VT | 1.462433e+18 | https://pbs.twimg.com/profile_images/793622420364165120/em1s6fZT_normal.jpg | False | 118449 | 2267 | 2137 | 9323 | https://t.co/P16tJd6SDa | True | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 2021-12-23T14:37:25+00:00 | https://api.twitter.com/2/tweets/search/all?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ct... | 2.8.2 | NaN |

| 3 | 1313590839977947136 | 1313590839977947136 | NaN | 1.313523e+18 | NaN | 602060212 | NaN | 602060212.0 | NaN | 2020-10-06 21:23:56+00:00 | the guy who publicly makes a display of how much feminist literature he reads and waxes on how important bookshelf representation is at least 50% more likely to be a sexpest than the bro who just likes to vibe about david foster wallace and hemingway. this is science. https://t.co/QsvsVxBY15 | en | Twitter Web App | 98 | 1 | 2 | 12 | everyone | False | NaN | NaN | NaN | [{"start": 216, "end": 235, "probability": 0.9361, "type": "Person", "normalized_text": "david foster wallace"}, {"start": 241, "end": 249, "probability": 0.6625, "type": "Person", "normalized_text": "hemingway"}] | NaN | NaN | NaN | [{"start": 269, "end": 292, "url": "https://t.co/QsvsVxBY15", "expanded_url": "https://twitter.com/ebruenig/status/1313522459899985922", "display_url": "twitter.com/ebruenig/statu\u2026"}] | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 602060212 | 2012-06-07T17:38:18.000Z | ben_geier | Goy Division/Jew Order | Journalist, writer, Brooklyn trash. We’re all hypocrites, but you’re a patriot. Married to my wife, dad to a baby. | NaN | NaN | NaN | NaN | NaN | Brooklyn, NY | 7.233185e+17 | https://pbs.twimg.com/profile_images/1182430150920691712/Yt8LKrXl_normal.jpg | False | 12596 | 2981 | 128 | 47822 | NaN | False | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 2021-12-23T14:37:25+00:00 | https://api.twitter.com/2/tweets/search/all?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ct... | 2.8.2 | NaN |

| 4 | 1313523086424186881 | 1313523086424186881 | NaN | NaN | NaN | 602060212 | NaN | NaN | NaN | 2020-10-06 16:54:42+00:00 | the guy who publicly makes a display of how much feminist literature he reads and waxes on how important bookshelf representation is at least 50% more likely to be a sexpest than the bro who just likes to vibe about david foster wallace and hemingway. this is science. https://t.co/QsvsVxBY15 | en | Twitter Web App | 98 | 1 | 2 | 12 | everyone | False | NaN | NaN | NaN | [{"start": 216, "end": 235, "probability": 0.9361, "type": "Person", "normalized_text": "david foster wallace"}, {"start": 241, "end": 249, "probability": 0.6625, "type": "Person", "normalized_text": "hemingway"}] | NaN | NaN | NaN | [{"start": 269, "end": 292, "url": "https://t.co/QsvsVxBY15", "expanded_url": "https://twitter.com/ebruenig/status/1313522459899985922", "display_url": "twitter.com/ebruenig/statu\u2026"}] | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 602060212 | 2012-06-07T17:38:18.000Z | ben_geier | Goy Division/Jew Order | Journalist, writer, Brooklyn trash. We’re all hypocrites, but you’re a patriot. Married to my wife, dad to a baby. | NaN | NaN | NaN | NaN | NaN | Brooklyn, NY | 7.233185e+17 | https://pbs.twimg.com/profile_images/1182430150920691712/Yt8LKrXl_normal.jpg | False | 12596 | 2981 | 128 | 47822 | NaN | False | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 2021-12-23T14:37:25+00:00 | https://api.twitter.com/2/tweets/search/all?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ct... | 2.8.2 | NaN |

| 5 | 1298060678432010240 | 1298060678432010240 | NaN | 1.297936e+18 | NaN | 23314192 | NaN | 236999725.0 | NaN | 2020-08-25 00:52:37+00:00 | david foster wallace bro conversation again? i feel like by this point in the discourse i need footnotes! (just a little "insider" dfw humor for ya) | en | Twitter Web App | 407 | 1 | 4 | 23 | everyone | False | NaN | NaN | NaN | [{"start": 0, "end": 19, "probability": 0.7756, "type": "Person", "normalized_text": "david foster wallace"}] | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 23314192 | 2009-03-08T14:49:07.000Z | chrisdeville | Chris DeVille | Managing Editor, Stereogum // chris at stereogum dot com | NaN | NaN | NaN | NaN | [{"start": 0, "end": 23, "url": "https://t.co/3jEZqP2it0", "expanded_url": "http://www.stereogum.com", "display_url": "stereogum.com"}] | Columbus | 1.438875e+18 | https://pbs.twimg.com/profile_images/378800000054104895/202f3a3d00921313b1da14a88b0733c9_normal.jpeg | False | 14133 | 995 | 271 | 23755 | https://t.co/3jEZqP2it0 | True | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 2021-12-23T14:37:25+00:00 | https://api.twitter.com/2/tweets/search/all?expansions=author_id%2Cin_reply_to_user_id%2Creferenced_tweets.id%2Creferenced_tweets.id.author_id%2Centities.mentions.username%2Cattachments.poll_ids%2Cattachments.media_keys%2Cgeo.place_id&tweet.fields=attachments%2Cauthor_id%2Ccontext_annotations%2Cconversation_id%2Ccreated_at%2Centities%2Cgeo%2Cid%2Cin_reply_to_user_id%2Clang%2Cpublic_metrics%2Ct... | 2.8.2 | NaN |